[Case Study] On-Premises AI Chat Implementation with a Local LLM for Enhanced Data Analysis and Managerial Decision Support in the LadiesGym Network

Client

Project goal

The objective of the project was to deploy an on-premises corporate chat solution backed by a local large language model (LLM) to support data analysis and managerial decision-making within the gym network. The solution was designed to enhance data processing capabilities, improve decision support, and keep sensitive information on-site for data security. The system was also intended to complement remote models through prompt tokenization, allowing versatile integration.
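The case study does not spell out how prompt tokenization works. One common reading is that sensitive values are swapped for placeholder tokens before a prompt leaves the site and restored in the answer afterwards. The sketch below illustrates that reading only; the patterns, the function names `tokenize_prompt` and `restore`, and the token format are all hypothetical and not taken from the project.

```python
import re

# Hypothetical patterns: a real deployment would define its own sensitive fields.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def tokenize_prompt(prompt):
    """Replace sensitive values with placeholder tokens.

    Returns the masked prompt and a mapping from token to original value.
    The mapping stays on-site; only the masked prompt would be sent out.
    """
    mapping = {}
    for label, pattern in PATTERNS.items():
        def replace(match, label=label):
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        prompt = pattern.sub(replace, prompt)
    return prompt, mapping


def restore(text, mapping):
    """Put the original values back into text returned by a remote model."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text


masked, mapping = tokenize_prompt("Contact anna@example.com about the Q1 report.")
print(masked)
print(restore(masked, mapping))
```

In this reading, the remote model only ever sees the placeholder, while the local side keeps the mapping needed to make the answer readable again.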

Solution

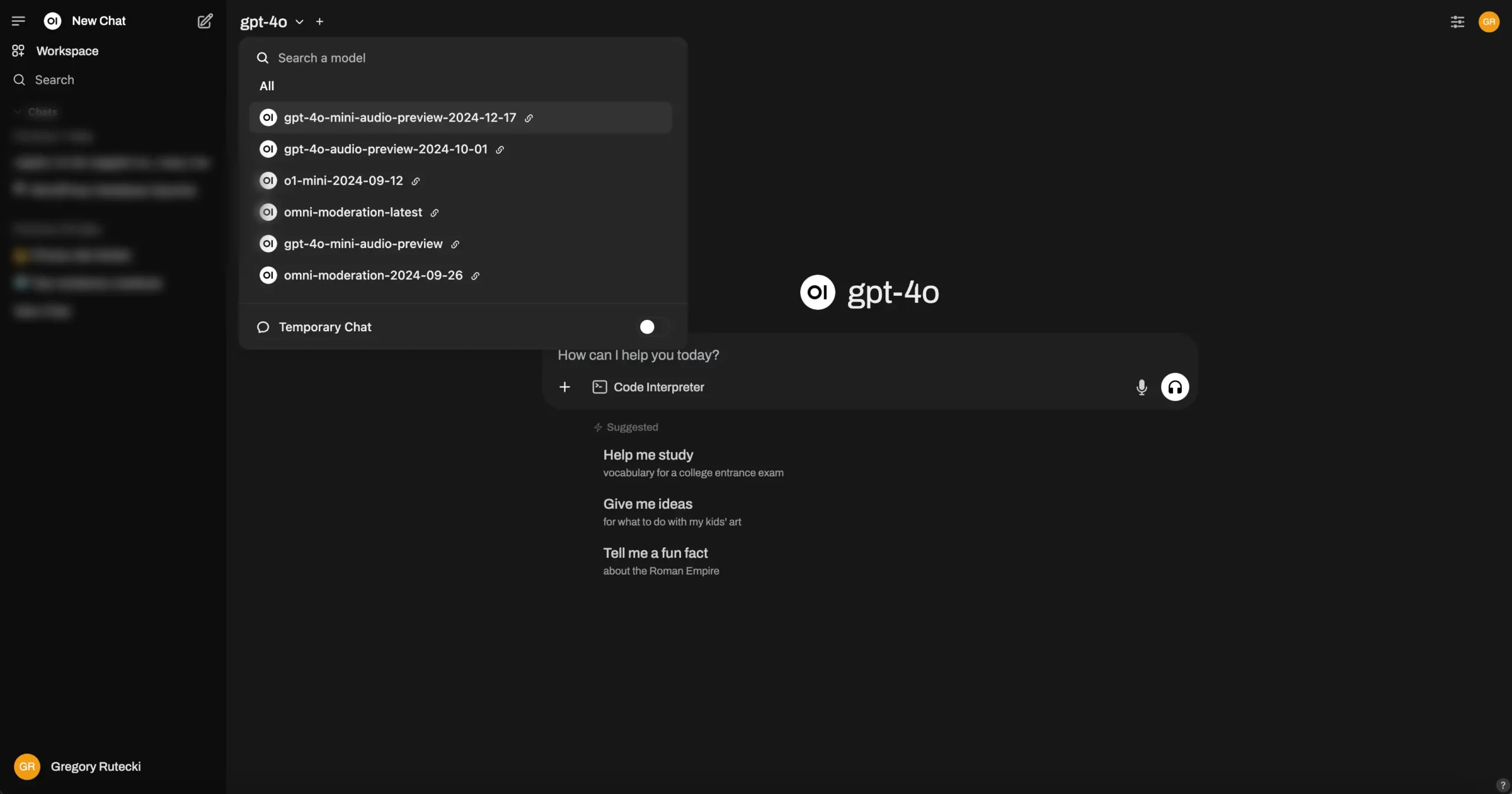

We implemented the solution by deploying an on-premises stack on a cloud machine with NVIDIA CUDA support, which runs the Llama3.3 large language model locally. The setup also provides access to remote models based on prompt tokenization. The deployment is built on OpenWebUI, Ollama, and Llama3.3, and the hosting machine was significantly optimized to ensure robust performance and scalability.
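To give a feel for how an application can talk to a stack like this, here is a minimal sketch that sends one question to a locally running Ollama server over its `/api/chat` endpoint. It assumes Ollama listens on its default port 11434 and that the model is available under the tag `llama3.3`; the helper names and the sample question are illustrative and not part of the project code.

```python
import json
import urllib.request

# Assumption: Ollama runs on the same host with its default port.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_payload(question, model="llama3.3"):
    """Build the JSON body for a single-turn, non-streaming chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,  # ask for one complete JSON response
    }


def ask_local_model(question, url=OLLAMA_URL):
    """Send the question to the local model and return the reply text."""
    data = json.dumps(build_chat_payload(question)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["message"]["content"]


if __name__ == "__main__":
    # Requires a running Ollama server with the model already pulled.
    print(ask_local_model("Summarize last month's attendance trends."))
```

Because the request never leaves the host, the question and any data pasted into it stay on the machine that runs the model. In the described setup, OpenWebUI plays the role of the chat front end in front of Ollama.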

Tech

Development

Team

1) Business Consultant

2) Technical Consultant

3) Mid Python Developer

4) IT Support Engineer

Budget

20-40k USD +

Project year

Q1 2025